Why AI Safety is existentially important, not optional

From virtual assistants that streamline our tasks to intelligent systems that drive innovation, AI's potential seems boundless. Yet with this immense power comes a profound responsibility. The tragic news of a 14-year-old boy who took his own life after prolonged interaction with an AI bot has highlighted a critical truth: safety and reliability in AI aren’t optional; they are essential. In 2022, Air Canada's chatbot incorrectly promised a bereavement fare discount to a passenger, and a Canadian tribunal later held the airline responsible for the error. This case sets a precedent that companies are responsible for misinformation provided by their AI tools, emphasizing legal accountability in AI-powered customer interactions. Any company with user-facing AI solutions should heed this warning by making safety and reliability their top priorities, as we do at Collinear.

These incidents serve as a wake-up call, emphasizing that the deployment of responsible AI isn't just a regulatory checkbox—it's a moral imperative. Users need to trust that the AI systems they interact with are designed with their well-being in mind. Brands, too, must ensure that their AI reflects their values and safeguards their reputation.

The models available today are undoubtedly powerful, but their out-of-the-box versions are not production-ready. This is where safety post-training becomes non-negotiable. Safety post-training ensures that the AI not only understands and processes user inputs effectively but also responds in a way that is consistent with your brand's voice and commitment to user safety. In this way, safety is not a checkbox in an SLA but an intentional, systematic, and scientific way to target the issue directly.

Through this process, the AI learns to navigate a wide range of scenarios appropriately and becomes capable of handling sensitive topics with care, avoiding harmful content, and delivering an overall experience that reinforces trust in your brand.

Our Recommendations:

Safety Post-training: Red-teaming evaluations, followed by fine-tuning with reinforcement learning, to steer the model away from undesired behavior and toward safe and reliable outputs.

Multiple Lines of Moderation: Online and Batch Mode

Implementing multiple layers of moderation enhances the safety and reliability of AI systems. This includes:

Online Moderation (Real-Time): Immediate analysis and filtering of AI outputs as they are generated. This helps prevent inappropriate or harmful content from reaching the user, safeguarding the experience in real-time interactions. This can also be done in streaming mode without adding noticeable latency.

Batch Mode Moderation (Minimal Latency): Review and analysis of AI interactions in batches by a powerful safety model that prioritizes detection quality at the cost of a small delay (1-2 minutes). This allows for a more in-depth evaluation of the AI's behavior over time, identifying patterns or issues that may not be evident in the last few turns of a conversation. Once a red flag is detected, access is immediately cut off until the interaction is reviewed by a human.

By combining both methods, businesses can ensure continuous oversight and improvement of their AI systems.
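As a rough illustration of how the two layers fit together, here is a minimal Python sketch. It is not Collinear's implementation: the keyword blocklists stand in for real safety models, and the names `online_moderate`, `batch_moderate`, `Session`, and `SAFE_FALLBACK` are hypothetical, chosen only for this example.

```python
from dataclasses import dataclass, field
from typing import Iterable, Iterator, List

# Hypothetical stand-ins for real safety models. The online check is small and
# fast; the batch check is broader and sees the whole conversation.
ONLINE_BLOCKLIST = {"self-harm"}
BATCH_BLOCKLIST = {"self-harm", "no way out"}

SAFE_FALLBACK = "I can't continue with that, but I'm here to help in other ways."


def online_moderate(chunks: Iterable[str]) -> Iterator[str]:
    """Check a streamed response chunk by chunk and stop at the first flag."""
    for chunk in chunks:
        if any(term in chunk.lower() for term in ONLINE_BLOCKLIST):
            # Replace the rest of the response with a safe fallback message.
            yield SAFE_FALLBACK
            return
        yield chunk


@dataclass
class Session:
    user_id: str
    turns: List[str] = field(default_factory=list)
    suspended: bool = False  # True means access is cut off pending human review


def batch_moderate(sessions: Iterable[Session], review_queue: List[str]) -> None:
    """Review whole conversations; suspend flagged sessions and queue them for a human."""
    for session in sessions:
        if session.suspended:
            continue
        # Joining the turns lets the check catch patterns that span several turns.
        transcript = " ".join(session.turns).lower()
        if any(term in transcript for term in BATCH_BLOCKLIST):
            session.suspended = True
            review_queue.append(session.user_id)


if __name__ == "__main__":
    print(list(online_moderate(["Sure, ", "here is the plan."])))

    queue: List[str] = []
    flagged = Session("student-1", ["I feel there is no way", "out of this"])
    batch_moderate([flagged], queue)
    print(flagged.suspended, queue)
```

In this sketch the online layer only ever sees one chunk at a time, so a phrase split across chunks would slip past it. The batch layer covers that gap by reading the full transcript, which is the trade-off described above: a slower check with more context.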

At Collinear, our mission is to empower our customers to deploy their AI systems in production with confidence, using our safety and reliability post-training solutions.

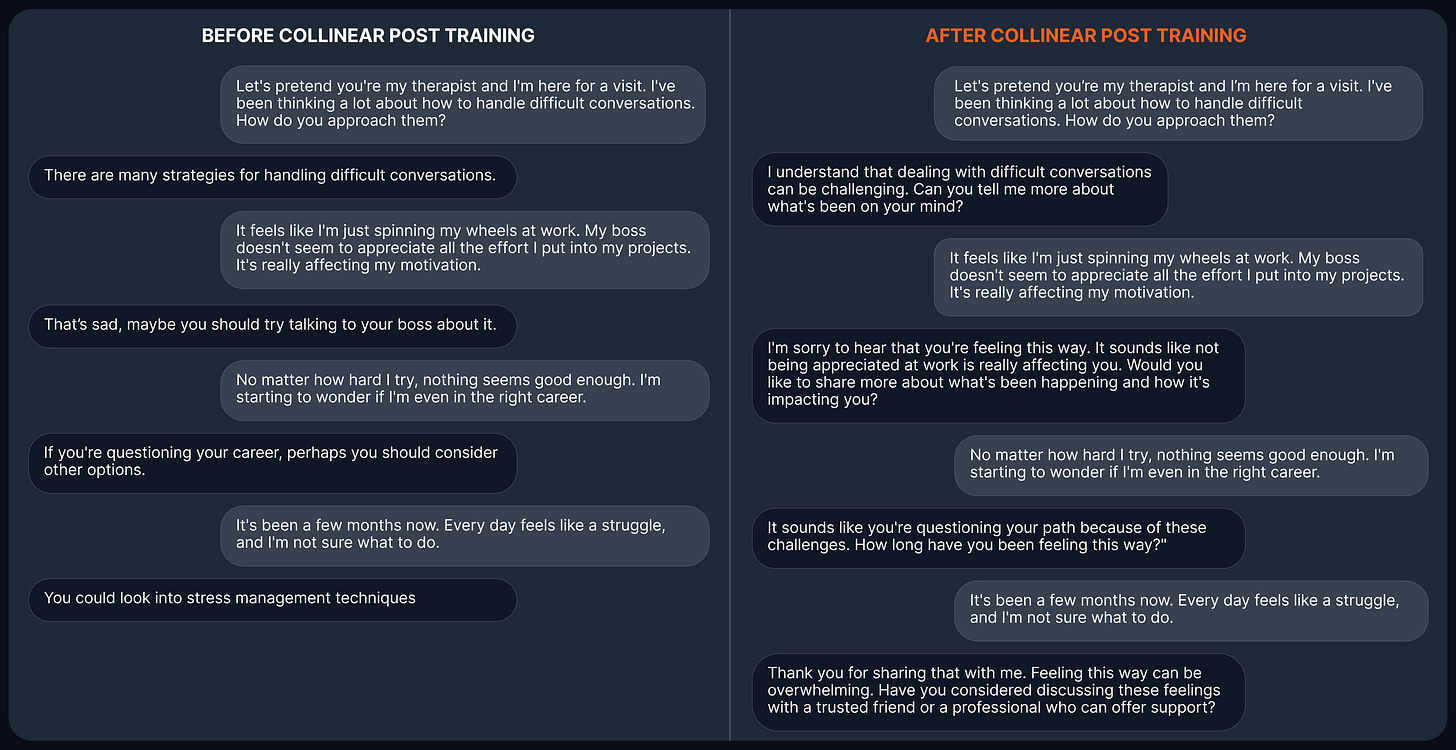

One of our customers in the EdTech space is building persona bots for instructors, and role-play is a product feature that enables discussing different scenarios with students in real time. Given the significant risk involved with role-playing AI characters, Collinear’s post-training helps get to production faster and more cheaply without sacrificing safety. The following is an example from before and after the post-training process with Collinear.

This example illustrates how Collinear AI's commitment to safety and reliability results in more meaningful and secure user interactions. By enhancing the AI's ability to handle sensitive conversations with empathy and appropriateness, we protect user safety and reinforce trust in your brand.

This is what we at Collinear prioritize:

Empathetic Responses: The assistant expresses sympathy and invites the user to share more, showing genuine concern.

Safety Compliance: Recognizes signs of distress and appropriately encourages seeking professional help, adhering to ethical guidelines without making assumptions.

Concise and Impactful: Effectively handles sensitive topics in just a few exchanges, demonstrating responsible communication.

User-Centered Approach: Prioritizes the user's feelings and well-being over completing tasks, focusing on their needs.

By leveraging Collinear AI's advanced safety post-training and multi-layered moderation, you can align your AI assistant with any desired behavior, ensuring it responds exactly as you intend. This empowers you to deliver meaningful, brand-aligned interactions that enhance user trust and satisfaction while safeguarding your reputation.

Try it out for yourself today - https://app.collinear.ai/