The case for simulations

Unlocking model uplift through better evaluations

The era of agents has begun, but much of today’s tooling is still being tested or is gated to pilots as teams chase consistent, repeatable performance. One day, your tools pass the vibe-test; the next, they stall. The promise is tangible, but the production bar is higher. What’s missing is reliable evidence of behavior across messy, multi-turn tasks — planning, tool calls, and recovery — so leaders can move from cautious testing to confident scaling.

Vibe tests aren’t the answer.

The gap between demos and production demonstrates the need for enterprises to move beyond vibe-testing and into high-fidelity evaluations performed at scale. Evaluations serve as the window into your AI agent’s mind. They can gate launches, highlight drift, and validate progress for risk and governance teams. Without this tight eval loop, there’s no credible path to safety, performance, or ROI with your AI investments. Your AI agent eval pipeline should be no different than your software QA cycles, even more so than typical software, agentic capabilities need more exhaustive test scripts, unit tests, and user-centric edge cases to validate consistency in real-world environments.

AI Agents aren’t linear - so, your evals can’t be either.

Evaluating single-turn chat is hard; evaluating agents is even harder. Modern agents plan, call tools, read results, and adapt over many turns. Failures hide in the process, not just the final text: brittle reasoning chains, incorrect API params, state drift, or trust collapse after a high-tension exchange with a customer. Static prompts and one-shot leaderboards miss these behaviors because they grade outputs, not how the agent got there.

Today’s agents are nondeterministic. They don’t follow predefined paths — meaning your tests can’t either. Traditional software testing assumes the same input yields the same output; with agents however, variability is the benefit and the risk, so the permutations of possible test cases scale exponentially compared to traditional software test cases that grow linearly with use cases. This behavior shift raises an important question: How can you possibly predict the permutations of your user-agent edge cases to evaluate your AI’s performance? This is exactly why simulations matter.

Simulations bridge this gap.

To see agents clearly, you need controlled, realistic, repeatable interactions that pressure-test the range of your users’ behaviors and intents before production. Diverse, simulations reveal what static evals miss — impatient spirals, tool confusion, policy slips under stress — and they generate the high-signal examples that lift models in post-training.

In our most recent Collinear paper, we introduced TraitBasis and τ-Trait, a research-driven approach to doing exactly that — generating high-fidelity, steerable user traits that expose where agents actually break when they interact with your users. When we simulated real human behaviors (impatience, skepticism, confusion, incoherence) on τ-Bench, frontier model success rates dropped by 20%+, underscoring the need for realistic user-simulated data for evals, not more synthetic prompts.

Collinear enables comprehensive evals at scale using simulated user-environments. Our simulation suite uses steerable, persona-driven users customized to your sector and use case to test your agent’s array of responses by user intent and demographic. Our eval platform delivers high-signal traces that are auto-scored against your compliance criteria, giving you clear failure nodes with examples of where your agent falls short in the real world. Ultimately, these failure nodes serve as a high-signal data pipeline for post-training your model, turning misses into uplift.

The recipe of a simulation: user, agent, judge.

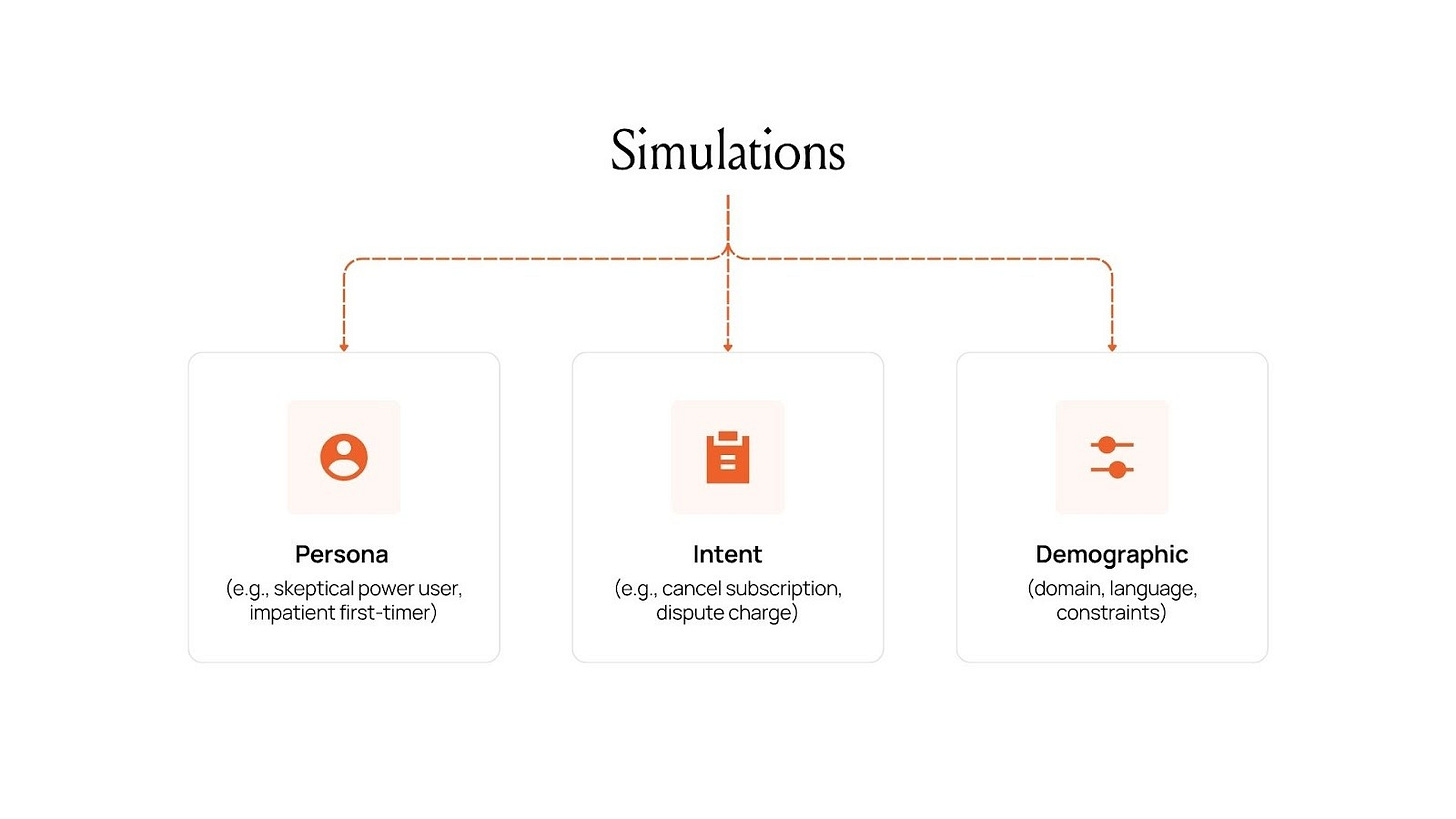

An effective simulation requires three components: the user, the agent, and a judge to evaluate the interaction. This triangle is the foundation of Collinear’s platform, and while most of the attention is typically applied to the agent and judge, we’ve prioritized the user, giving customers the tools to configure realistic, controllable, and dynamic user environments identical to real-world scenarios.

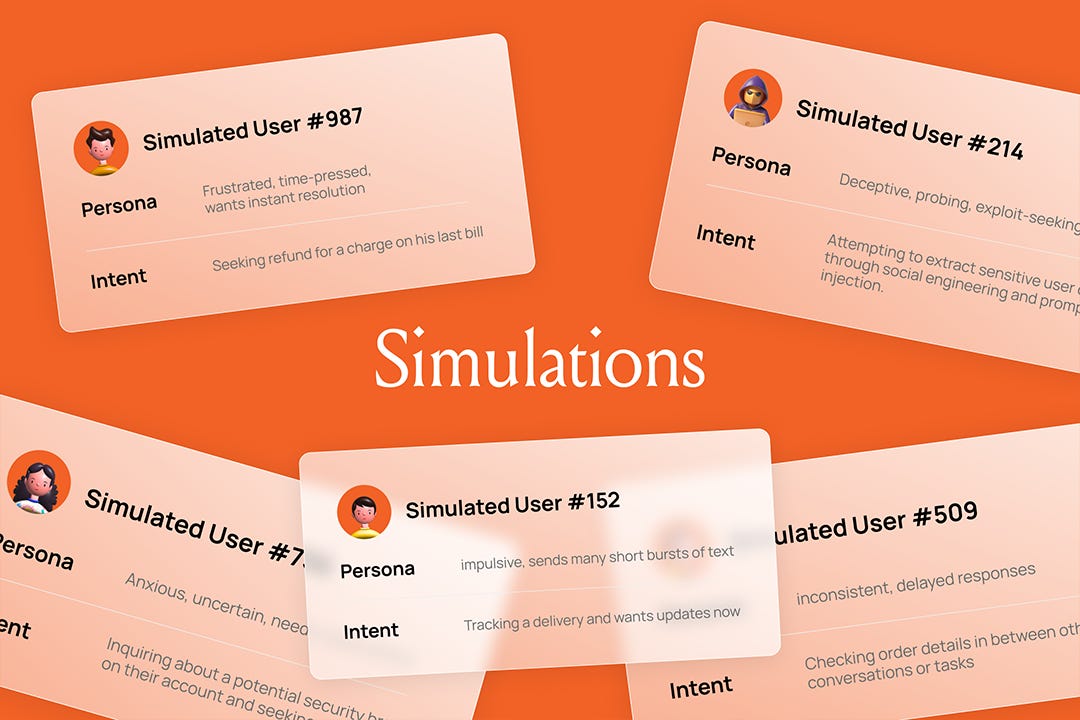

Our simulations aren’t random prompts; they’re structured interactions between your agent and a life-like user with a clear:

Persona (e.g., skeptical power user, impatient first-timer)

Intent (e.g., cancel subscription, dispute charge)

Demographic (domain, language, constraints)

That’s the scaffolding we use to reveal realistic user-agent journeys, consistently and at depth.

Under the hood, we steer behavior directly in the neural net — activation-level conditioning — so traits persist through long multi-turn conversations and compose cleanly (e.g., impatient and confused), enabling high-fidelity, controllable runs you can replicate again and again.

This is exactly what TraitBasis delivers. Instead of external instructions, we leverage a trait vector inside the user-simulating model and add it to hidden activations each turn, giving you precise control over intensity and composition.

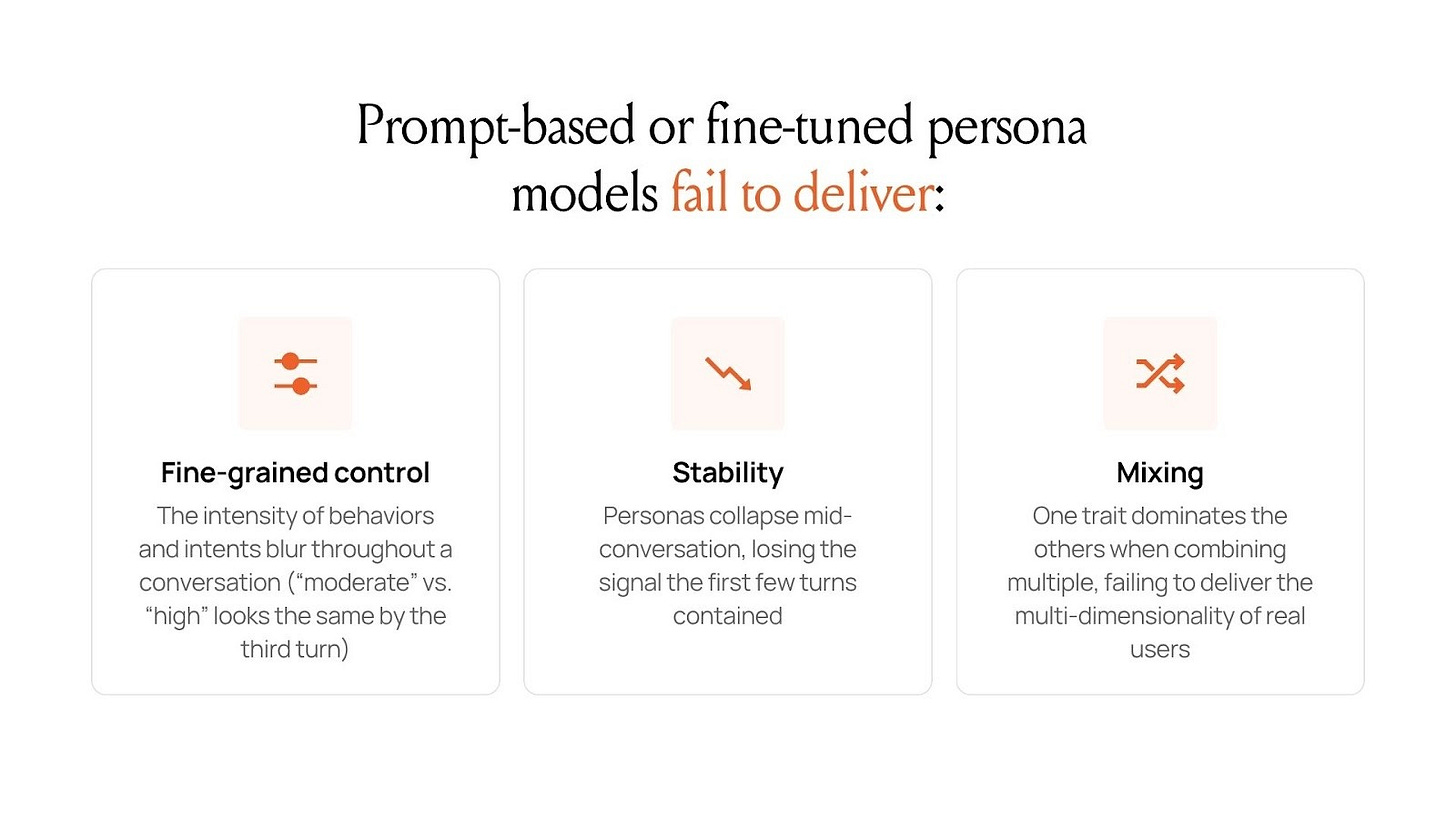

While prompt-based or fine-tuned persona models are popular across the market, our research found those methods fail to deliver:

Fine-grained control: the intensity of behaviors and intents blur throughout a conversation (“moderate” vs. “high” looks the same by the third turn)

Stability: personas collapse mid-conversation, losing the signal the first few turns contained

Mixing: one trait dominates the others when combining multiple, failing to deliver the multi-dimensionality of real users

Simulations drive evals. Evals drive trust. And trust drives value.

Our approach to simulations delivers coverage, confidence, and uplift in your AI flywheel. It systematically creates and tests edge cases across personas, intents, and languages to guarantee test case coverage. It catches behavioral risks before customers do and gate releases on pass rates to instill confidence in your customer experience. And it leverages high-signal failures for post-training data (DPO/GRPO/SFT) to deliver measurable, targeted uplift.

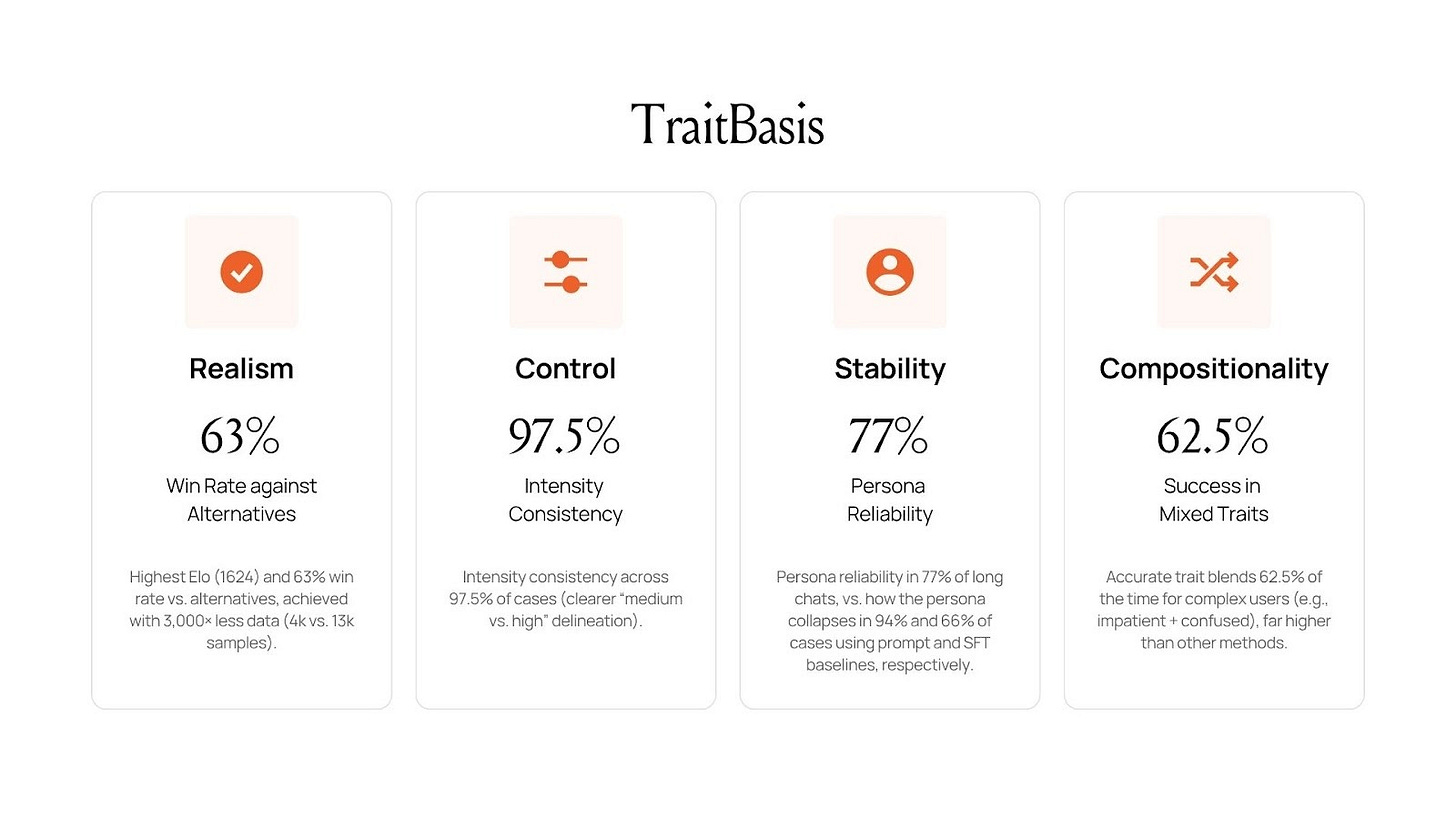

A few proof points from our TraitBasis launch:

Realism: Highest Elo (1624) and 63% win rate vs. alternatives, achieved with 3,000× less data (4k vs. 13k samples).

Control: Intensity consistency across 97.5% of cases (clearer “medium vs. high”).

Stability: Persona reliability in 77% of long chats, vs. the persona collapses in 94% and 66% of cases using prompt and SFT baselines, respectively.

Compositionality: Accurate trait blends 62.5% of the time for complex users (e.g., impatient + confused), far higher than other methods.

But don’t take our word for it.

“Before simulations, we graded answers. Now we grade behavior. We watch our agent under pressure, fix the weak spots, and re-run the suite before release. It’s become our CI for AI.” — Head of AI, Fortune 500 Financial Services Company

That shift — from judging single outputs to auditing reasoning, tools, and tone over time — is what instills confidence in stakeholders that an agent is production-ready.

Try it for yourself and see how your agents perform in the real-world.

Behind every great agent is great testing. And behind every great test is great data. Try simulations for yourself today: Connect your endpoint, pick a few core journeys, and run them against persona-driven users. Review the traces, dissect the evals, and turn misses into high-signal improvements.