OpenAI's gpt-oss on LiveCodeBench: A Competitive Programming Deep Dive

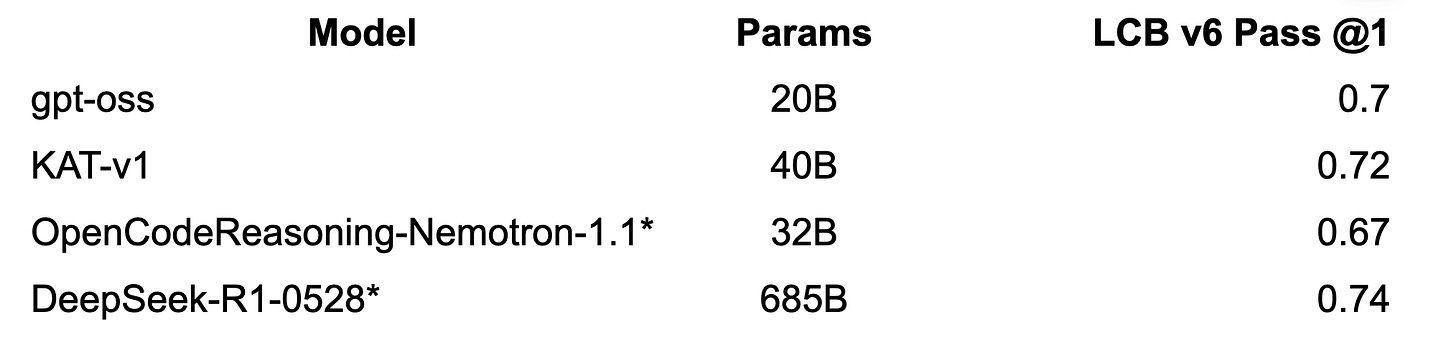

tl;dr: gpt-oss-20b is a strong model for competitive coding, but on hard problems it uses more than 3x as many tokens as DeepSeek-R1-0528.

OpenAI has finally released an open-weights model, gpt-oss. Its last open-weights release was GPT-2, back in 2019!

gpt-oss comes in two sizes, gpt-oss-20b and gpt-oss-120b. Both are mixture-of-experts (MoE) models, instruction-tuned for reasoning and agentic tasks. The reasoning level is configurable: you can switch between low, medium, and high reasoning modes.
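The gpt-oss model card describes selecting the reasoning level through the system prompt, with a line such as `Reasoning: high`. The sketch below builds such a prompt in the common role/content chat-message shape. The helper `system_prompt`, its default base text, and the example user message are hypothetical, and a given serving stack may expose the reasoning level through its own parameter instead.

```python
# Hypothetical helper: builds a gpt-oss system prompt that selects a reasoning level.
# Assumes the model-card convention of a "Reasoning: <level>" line in the system prompt.
VALID_LEVELS = ("low", "medium", "high")


def system_prompt(level: str, base: str = "You are a helpful assistant.") -> str:
    """Return a system prompt string requesting the given reasoning level."""
    level = level.lower()
    if level not in VALID_LEVELS:
        raise ValueError(f"reasoning level must be one of {VALID_LEVELS}, got {level!r}")
    # The reasoning level goes on its own line after the base instructions.
    return f"{base}\nReasoning: {level}"


# Chat messages in the usual role/content shape (illustrative problem text).
messages = [
    {"role": "system", "content": system_prompt("high")},
    {"role": "user", "content": "Given n, print the n-th Fibonacci number."},
]
print(messages[0]["content"])
```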

OpenAI's blog post reports that both models score ~98 on AIME 2025 (a competitive math benchmark), but we could not find any documented results for gpt-oss on LiveCodeBench (LCB). We benchmarked the model on LCB and analyzed its performance, including the quality of its reasoning.

gpt-oss-20b reaches 70% pass@1 on LCB v6 (problems from Aug 1, 2024 to Jan 31, 2025) in high reasoning mode, with 3 samples per problem. We set the maximum sequence length to 64k tokens. LCB v6 contains 323 problems in total: 79 easy, 102 medium, and 142 hard.
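With several samples per problem, pass@1 is typically the fraction of passing samples per problem, averaged over all problems; this is the standard unbiased pass@k estimator evaluated at k = 1. The sketch below assumes that aggregation (the post does not spell it out). The `results` list is hypothetical data; only the difficulty split comes from the text.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem with n samples, c of which pass all tests."""
    if n - c < k:
        # Every size-k subset of the samples contains at least one correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int = 1) -> float:
    """Average pass@k, in percent, over problems given (n, c) pairs."""
    return 100.0 * sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical outcomes: 3 samples per problem, c = number of samples that passed.
results = [(3, 3), (3, 2), (3, 0), (3, 1)]
print(round(benchmark_pass_at_k(results, k=1), 1))

# Difficulty split of LCB v6 as stated in the text; the counts add up to 323.
LCB_V6_SPLIT = {"easy": 79, "medium": 102, "hard": 142}
assert sum(LCB_V6_SPLIT.values()) == 323
```

At k = 1 the estimator reduces to c / n, so a problem solved in 2 of 3 samples contributes 2/3 rather than a binary pass or fail.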

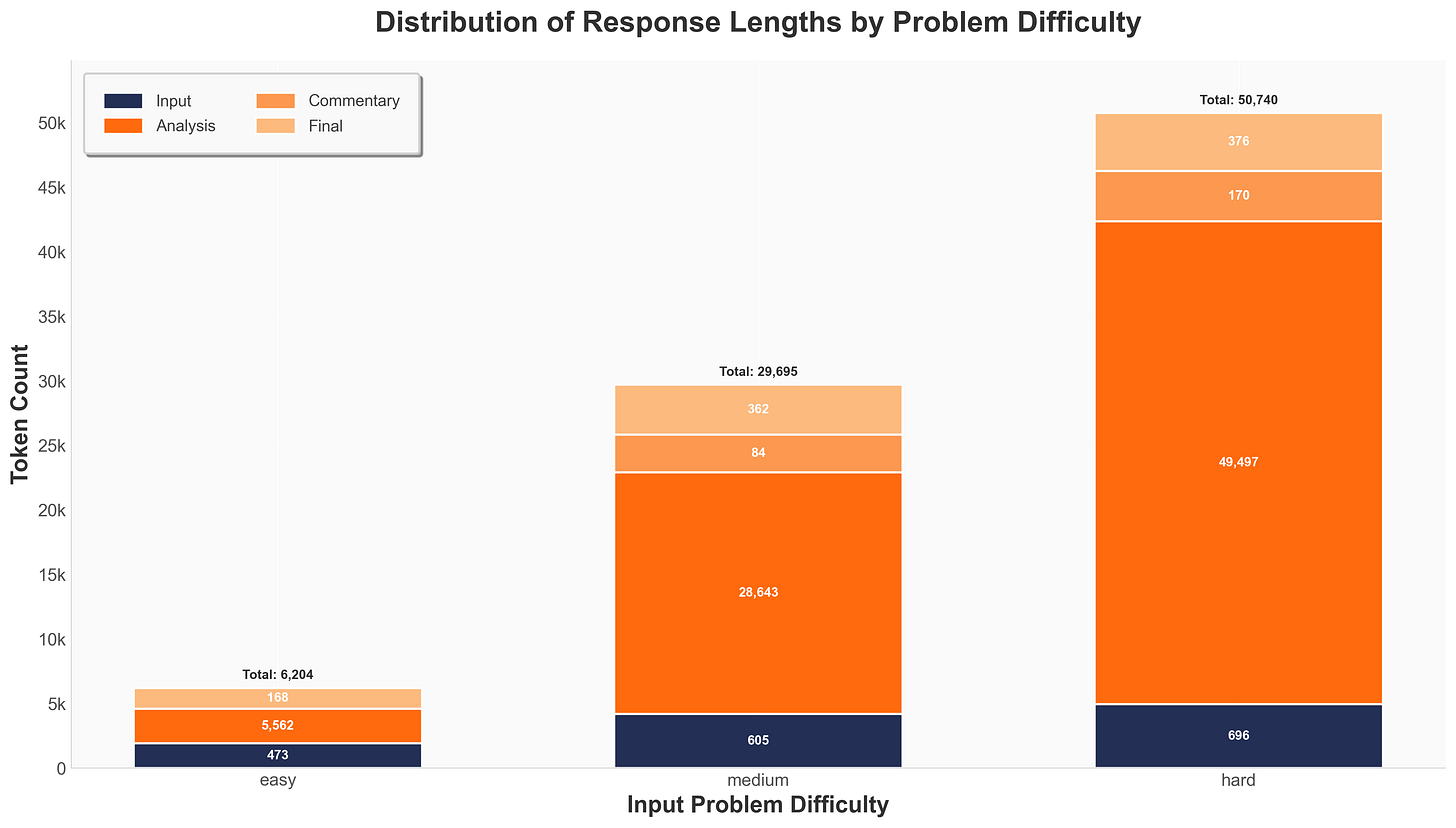

All gpt-oss responses are structured into three blocks (channels): <analysis>, <commentary>, and <final>. The following plot shows the distribution of the model's response length in each block against the input problem length, for each of the three difficulty levels.
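Per-block lengths can be measured from raw completions. A minimal sketch, assuming the completion text keeps gpt-oss's harmony-format special tokens (`<|channel|>`, `<|message|>`, `<|end|>`, `<|return|>`, `<|call|>`), which delimit the analysis, commentary, and final channels. It uses whitespace word counts as a rough stand-in for token counts; real measurements should use the model's tokenizer. The function name `channel_lengths` and the sample completion are hypothetical.

```python
import re
from collections import Counter

# One harmony message: channel name, optional header (e.g. a tool recipient or
# <|constrain|> hint), then the body up to the next terminator or end of string.
_MSG = re.compile(
    r"<\|channel\|>(\w+)[^<]*(?:<\|constrain\|>[^<]*)?<\|message\|>(.*?)"
    r"(?=<\|end\|>|<\|return\|>|<\|call\|>|<\|start\|>|\Z)",
    re.DOTALL,
)


def channel_lengths(raw: str) -> Counter:
    """Approximate length of each channel as a whitespace word count."""
    counts: Counter = Counter()
    for channel, body in _MSG.findall(raw):
        counts[channel] += len(body.split())
    return counts


# Hypothetical raw completion in harmony format.
raw = (
    "<|channel|>analysis<|message|>We need the n-th Fibonacci number; iterate.<|end|>"
    "<|start|>assistant<|channel|>final<|message|>Use a loop with two variables.<|return|>"
)
print(channel_lengths(raw))
```

If the serving stack strips special tokens during decoding, the channels must be recovered from its structured output instead.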

The model is very token-efficient on easy problems but extremely inefficient on hard ones. DeepSeek-R1-0528 averages about 15k tokens on hard problems, which makes gpt-oss-20b more than three times as verbose.
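The verbosity comparison is a ratio of mean response lengths on the hard split. A minimal sketch of that arithmetic: the per-sample token counts below are made up for illustration, and only the ~15k DeepSeek-R1-0528 figure comes from the text. A ratio above 3 corresponds to gpt-oss-20b averaging more than 45k tokens on hard problems.

```python
from collections import defaultdict
from statistics import mean


def mean_length_by_group(records: list[tuple[str, int]]) -> dict[str, float]:
    """Mean response length (in tokens) per group, from (group, n_tokens) records."""
    groups: dict[str, list[int]] = defaultdict(list)
    for group, n_tokens in records:
        groups[group].append(n_tokens)
    return {group: mean(lengths) for group, lengths in groups.items()}


# Hypothetical per-sample token counts, for illustration only (not measured data).
records = [("easy", 3_000), ("easy", 5_000), ("hard", 48_000), ("hard", 52_000)]
by_difficulty = mean_length_by_group(records)

# From the text: DeepSeek-R1-0528 averages about 15k tokens on hard problems.
R1_HARD_MEAN = 15_000
ratio = by_difficulty["hard"] / R1_HARD_MEAN
print(f"hard-problem verbosity vs. R1: {ratio:.2f}x")
```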

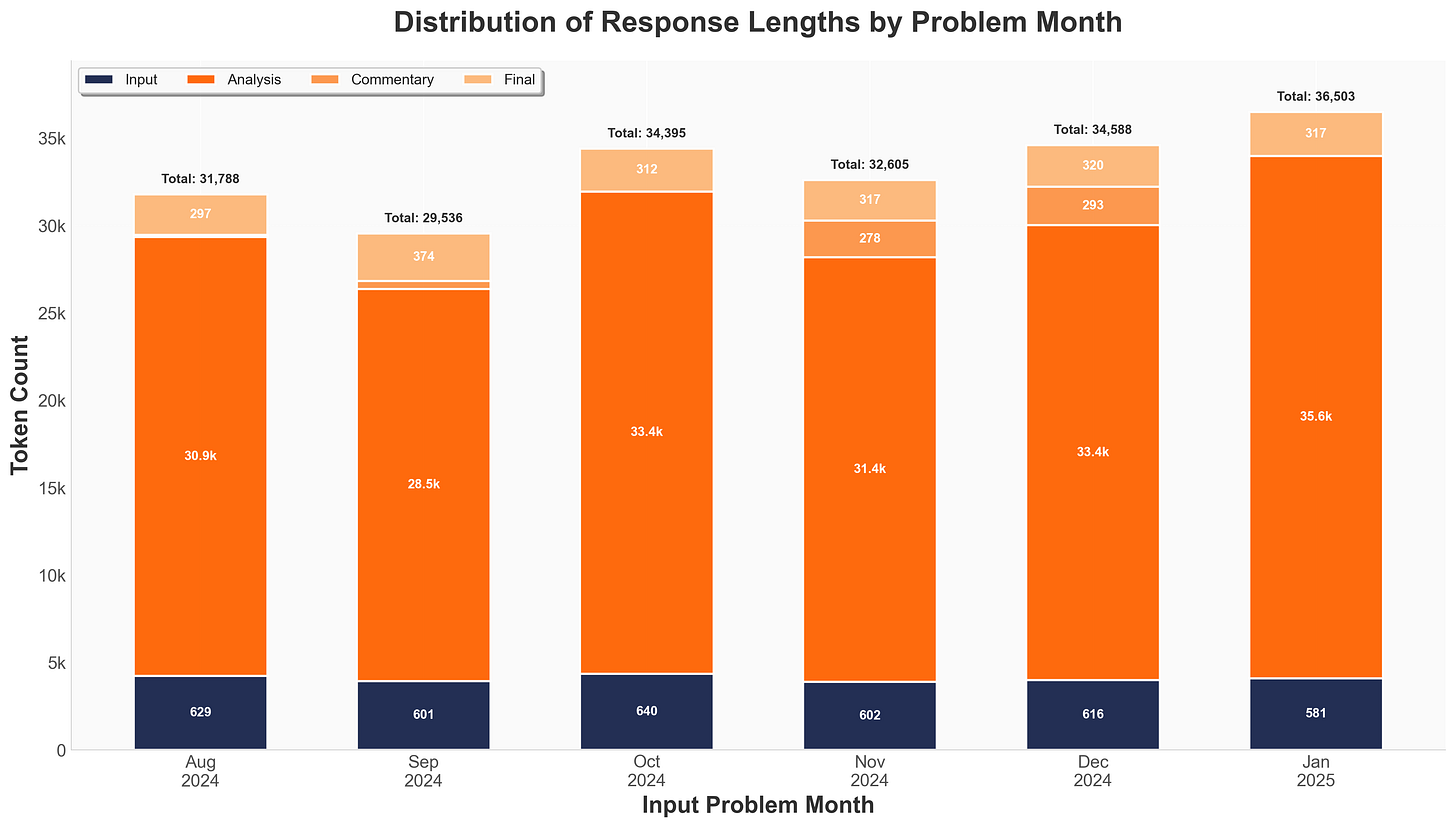

Distribution of the model's response length across the three blocks vs. the input problem length, by the month in which each evaluation problem was released. Response length is roughly uniform across months.

OpenAI's long-awaited open-weight gpt-oss-20b is good at competitive coding for its size, but it is very verbose compared with models of similar performance.

References:

Kwaipilot KAT-v1-40B

NVIDIA OpenCodeReasoning Nemotron

DeepSeek-R1-0528
