Introducing Curator Evals: A Benchmark for High-quality Post-training Data Curation

High-quality datasets are the foundation of better language models. Post-training methods like supervised fine-tuning and RLHF heavily rely on carefully curated data and reward models, but what curator is good and data quality is an open question.

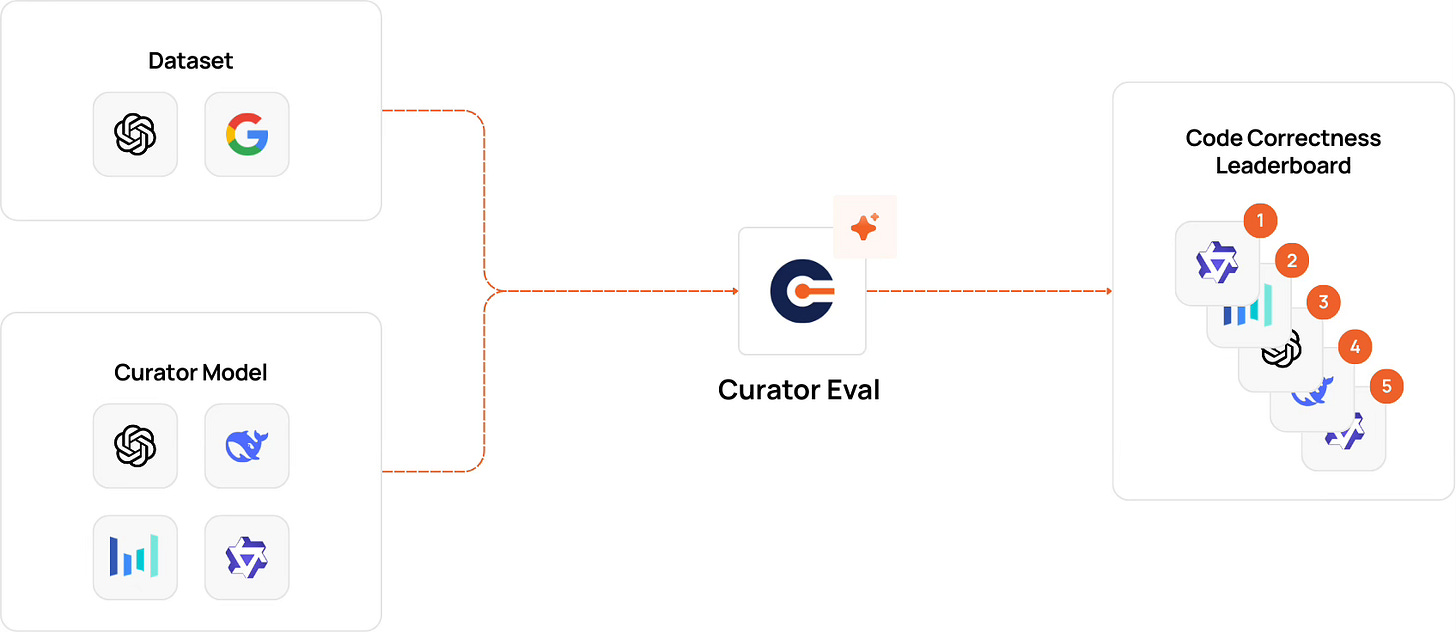

At Collinear, we built Curator Evals: a benchmarking and evaluation library designed to systematically measure the performance of curators and reward models.

Key Features

Task-Specific Evaluations: Evaluate models on code correctness task. (other tasks such as math correctness, coherence, and similar desirable data qualities are expected in later versions).

Flexible Model Support: Works with LLMs on various platforms.

Local inference with vLLM.

OpenAI API for GPT models.

Together AI for open-weights model hosting

Detailed Metrics: Provides accuracy scores and structured JSON outputs with component-level breakdowns (e.g., responses, scores).

Command-Line and Python API: Run quick CLI commands or integrate programmatically in user workflow

Intelligent Input/Output Processing

The framework comes with robust prompt formatting and output extraction tools tailored for each evaluation type.

Input Formatters handle task-specific prompt construction:

code_correctness_prompt = Template("""You are a helpful assistant tasked with evaluating the correctness of a code output .....

Respond in the following format only:

[RESULT] <1 or 0>

Do not include any explanations or additional text.

## Input Question:

{{prompt}}

## Code Output to Evaluate:

{{response}}

""")Output Formatters extract structured results from diverse response formats:

def _extract_code_qwen_output(text: str) -> int:

"""Extract output from Code Qwen model response"""

return _first_digit_after_key(text, "[RESULT]")Quick CLI Evaluation

# Install

conda create -n curator python=3.11 -y

conda activate curator

git clone https://github.com/collinear-ai/curator-evals.git

cd curator-evals

pip install uv

uv pip install -e .

# Run evaluation

curator-evals --task code_correctness \

--model meta-llama/Meta-Llama-3.1-8B-Instruct-Turbo \

--model-type llm \

--use-server \

--server-url None \

--provider togetherai \

--api-key $TOGETHER_API_KEY \

--input-format code_correctness_prompt \

--output-format collinear_code_qwen_judge \

--debugBenchmarking Details

The Curator Evals benchmark for code correctness is based on a curated dataset on Hugging Face Hub at collinear-ai/curator_evals_bench which consists of coding problems from two well-known benchmarks:

HumanEvalPack: Uses "correctness preference pairs" to test a model's ability to judge which of two code solutions is better.

MBPP (Mostly Basic Programming Problems): Includes a subset of its ~1,000 Python problems, where the task is to verify the correctness of a provided solution against automated test cases.

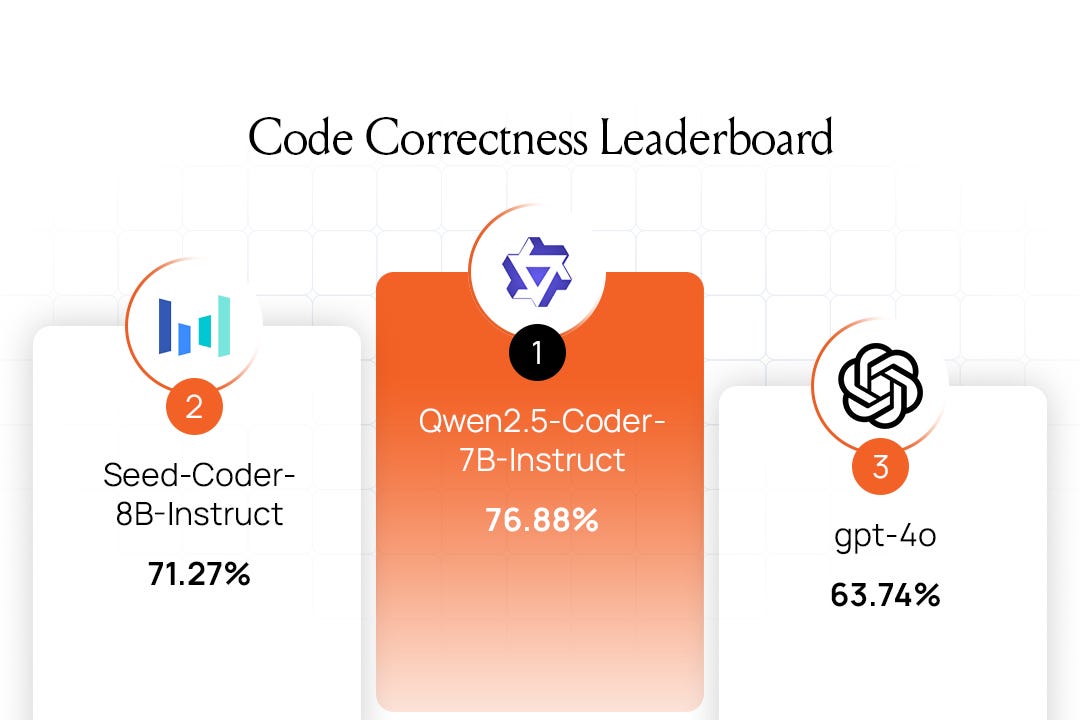

LeaderBoard

The leaderboard ranks models on the code correctness task using the Curator Eval Bench. Each model is given 1302 coding problems and evaluated on whether its generated solutions pass correctness checks, using curated prompt formatting and structured output parsing. This reflects the post-training quality, especially for code generation.

Discussion

Specialization Over Generalization: The results show that models specifically fine-tuned for coding excel at these tasks and prove that focused training can be more effective than a broader, general-purpose approach.

Data Quality Versus Model Size: The top-performing models are relatively small (7B-8B parameters), demonstrating that the quality of training data and post-training methods can be more critical for performance than model size.

Conclusion

Curator Evals is a step towards better curators and reward models. It enables AI practitioners to select the right curators across diverse model architectures and deployment scenarios for their tasks. Better curators and reward models lead to better data quality, and ultimately, better AI.

Ready to improve your AI’s performance?

Let’s talk about how Collinear can help you automatically

assess and curate post-training data for improving your AI.