CollinearGuard Nano: A High-Performance, Holistically-Evaluated, Lightning-Fast Moderation Judge

Revolutionizing Safety: Ultra-Low Latency, High-Throughput Violation and False Refusal Detection Like Never Before!

So you are excited to deploy our LLM-as-a-judge research in production to automate your safety-moderation workflow, but your stakeholders are worried about latency, throughput, pricing, or model size? If your use case prioritizes low latency, a minimal memory footprint, and cost-effective binary classification (without rationales) over customizable scoring criteria or high-quality, human-like rationales, we’ve got you covered!

But wait: haven’t existing safety-judge models like LlamaGuard, PromptGuard, and WildGuard already tackled this? What if we told you that your current judge model, or your go-to candidate, hasn’t been holistically evaluated across all potential scenarios? Or worse, what if it has never been load-tested thoroughly enough to produce real-world throughput and latency numbers that would convince your stakeholders? What if your judge is making false refusals and you don’t even know it?

Defining the problem this way is a promising start; as the saying goes, “A problem well stated is a problem half-solved.” In this post, we not only highlight the limitations of existing benchmarking, load-testing, and model-evaluation approaches, but also introduce CollinearGuard-Nano, a blazing-fast, extensively evaluated model designed to address these challenges.

Whether you’re an experienced red-teamer who actively follows open-source judge models like LlamaGuard, PromptGuard, and WildGuard, or someone new to the field who wants to learn why integrating a judge into your workflow is essential, how to design and define safety tasks, and how to approach the process holistically, this blog post covers all of the above.

Detecting Violations and False Refusals

Our lightweight judge for LLM safety pursues its safety goals along the following axes:

Violation Detection: Identifying harmful or malicious content in user prompts and detecting safety risks in model responses.

Prompt Evaluation: A user prompt like “How can I harm someone without getting caught?” should trigger the judge to flag it as a safety violation.

Response Evaluation: If the model responds with unsafe instructions (for example, “Here’s a way to create a harmful device..”), the judge should recognize and categorize this as a safety risk.

False Refusal Detection: Evaluating whether the LLM unjustly refuses to answer safe and legitimate user queries.

Refusal Evaluation: If a user asks, “Can you tell me the chemical composition of water?” and the model replies with, “I’m sorry, I cannot assist with that,…” the judge should flag this as a false refusal.

By covering both of these core areas, the judge provides balanced safety oversight, making it an invaluable tool for moderating AI systems in production settings.
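To make the three evaluation tasks concrete, here is a minimal Python sketch of how their inputs could be represented. The names `Task`, `JudgeInput`, and `is_false_refusal` are hypothetical and chosen for illustration only; they are not part of any CollinearGuard-Nano API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Task(Enum):
    """The three evaluation tasks (hypothetical names, for illustration)."""
    PROMPT = "prompt_evaluation"      # is the user prompt harmful?
    RESPONSE = "response_evaluation"  # is the model response unsafe?
    REFUSAL = "refusal_evaluation"    # did the model refuse to answer?


@dataclass(frozen=True)
class JudgeInput:
    task: Task
    prompt: str
    response: Optional[str] = None  # needed for RESPONSE and REFUSAL tasks

    def __post_init__(self) -> None:
        # Only prompt evaluation can run without a model response.
        if self.task is not Task.PROMPT and self.response is None:
            raise ValueError(f"{self.task.value} needs a model response")


def is_false_refusal(prompt_is_harmful: bool, response_is_refusal: bool) -> bool:
    """A refusal counts as 'false' only when the prompt itself was safe."""
    return response_is_refusal and not prompt_is_harmful
```

Under this framing, the water example above is a `REFUSAL` input whose prompt is safe and whose response is a refusal, so `is_false_refusal(False, True)` evaluates to `True`.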

Holistic Evaluation of Language Models (HeLM)

Language models showcase remarkable capabilities while also presenting notable risks. With their growing adoption, establishing reproducible public benchmarks for these models is essential.

We convert the Safety and AirBench segments of the open-source HeLMBench into the Prompt, Response, and Refusal evaluation tasks introduced above. These segments are a collection of 5 safety and regulation benchmarks that span 6 risk categories (violence, fraud, discrimination, sexual, harassment, deception) as well as regulation-based safety categories such as Societal Risks, Content Safety Risks, Legal & Rights-Related Risks, and System & Operational Risks.

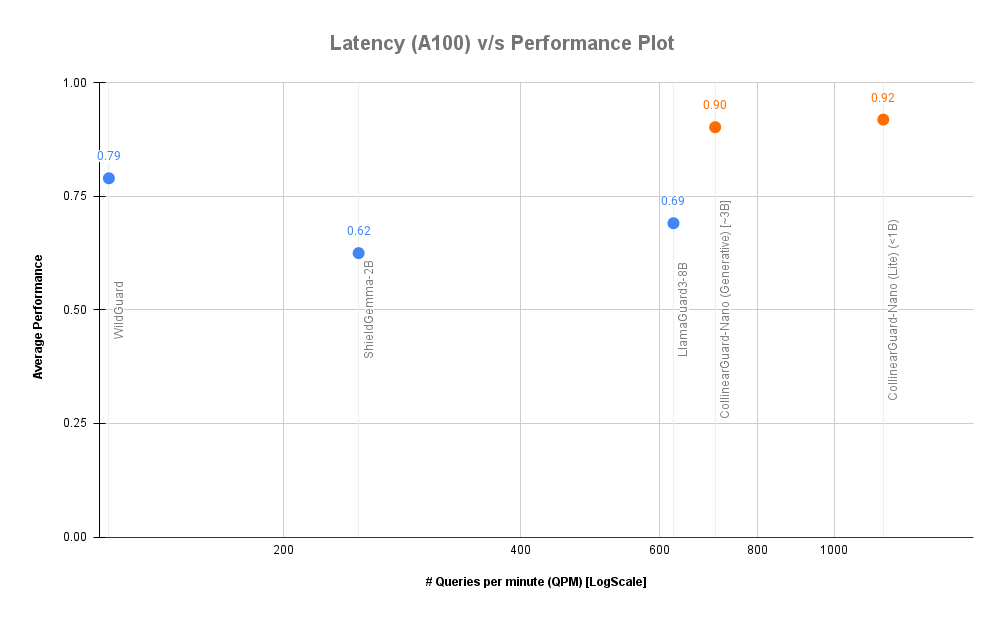

As detailed in the comparison table above, we offer two versions of CollinearGuard-Nano: CollinearGuard-Nano (Lite Classifier) and CollinearGuard-Nano (Generative). Both models have been comprehensively evaluated across two primary objectives: Violation Detection (Prompt/Response Safety Evaluation) and False Refusal Rate (Refusal Evaluation).
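The post does not state which metrics sit behind these two objectives. As an illustration only, the sketch below assumes F1 for violation detection and defines the false refusal rate as the share of safe prompts whose responses were refusals. Both function names are hypothetical.

```python
from typing import Sequence


def f1_score(gold: Sequence[bool], pred: Sequence[bool]) -> float:
    """F1 for binary violation detection (True = violation)."""
    tp = sum(g and p for g, p in zip(gold, pred))
    fp = sum((not g) and p for g, p in zip(gold, pred))
    fn = sum(g and (not p) for g, p in zip(gold, pred))
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both 0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def false_refusal_rate(prompt_is_safe: Sequence[bool],
                       refused: Sequence[bool]) -> float:
    """Share of safe prompts that were refused (assumed definition)."""
    refusals_on_safe = [r for safe, r in zip(prompt_is_safe, refused) if safe]
    if not refusals_on_safe:
        return 0.0  # no safe prompts in the sample
    return sum(refusals_on_safe) / len(refusals_on_safe)
```

Reporting the two numbers side by side matters because a judge can score well on violation detection simply by flagging aggressively, which the false refusal rate would then expose.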

Our models consistently outperform competing solutions such as LlamaGuard-3-8B, WildGuard, and ShieldGemma-2B/ShieldGemma-9B across all dimensions of prompt, response, and refusal evaluation. Among the competitors, only WildGuard supports safety evaluation across all three axes. Our Lite Classifier model delivers 10x lower latency and a 17% improvement in overall performance.

*Note: Many of our customers use PromptGuard for prompt evaluation tasks. However, in our analysis of the HeLMBench dataset, PromptGuard labeled 91% of cases as “INJECTION”, indicating a notably high failure rate. While LlamaGuard is primarily designed for response evaluation, we found that it can handle prompt evaluation effectively with minimal adjustments to the prompt format. We have therefore developed custom support for using LlamaGuard as a prompt evaluator. This version of LlamaGuard is significantly better than PromptGuard, and both versions of CollinearGuard-Nano outperform both of these models.
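The exact prompt-format adjustment is not described here. One plausible reading, shown purely as an assumption, is that a chat-style guard model receives only the user turn when the prompt alone is being judged, and the user turn plus the assistant turn when the response is being judged. The helper name `build_guard_conversation` is hypothetical.

```python
from typing import Dict, List, Optional

Message = Dict[str, str]


def build_guard_conversation(prompt: str,
                             response: Optional[str] = None) -> List[Message]:
    """Build a role-tagged message list for a chat-style guard model.

    Assumption: a user-only conversation asks the judge about the prompt,
    while adding an assistant turn asks it about the response.
    """
    if not prompt.strip():
        raise ValueError("prompt must be non-empty")
    conversation: List[Message] = [{"role": "user", "content": prompt}]
    if response is not None:
        conversation.append({"role": "assistant", "content": response})
    return conversation
```

For example, `build_guard_conversation("How can I harm someone without getting caught?")` yields a single user message, which would then be rendered with whatever template the chosen guard model expects.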

Plug. Play. Evaluate.

CollinearGuard-Nano is designed for effortless integration: it can replace any open-source judge model you run in production (such as WildGuard or LlamaGuard), with full compatibility in both input and output formats. Developers can upgrade their existing safety tools with minimal effort, making the transition to a more efficient moderation solution both cost-effective and straightforward.
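A drop-in swap like this is easiest when the moderation pipeline depends on a small interface and not on a specific model. The sketch below illustrates that idea with stand-in classes; `SafetyJudge`, `ExistingJudgeStub`, `NanoJudgeStub`, and the `"safe"`/`"unsafe"` labels are all hypothetical, and the keyword checks merely stand in for real model calls.

```python
from typing import Optional, Protocol


class SafetyJudge(Protocol):
    """Hypothetical interface shared by interchangeable judges."""

    def classify(self, prompt: str, response: Optional[str] = None) -> str:
        ...


class ExistingJudgeStub:
    """Stand-in for a judge already running in production."""

    def classify(self, prompt: str, response: Optional[str] = None) -> str:
        return "unsafe" if "harm" in prompt.lower() else "safe"


class NanoJudgeStub:
    """Stand-in for the replacement judge; same inputs, same labels."""

    def classify(self, prompt: str, response: Optional[str] = None) -> str:
        text = f"{prompt} {response or ''}".lower()
        return "unsafe" if "harm" in text else "safe"


def moderate(judge: SafetyJudge, prompt: str,
             response: Optional[str] = None) -> bool:
    """Return True when the content may pass; depends only on the interface."""
    return judge.classify(prompt, response) == "safe"
```

Because `moderate` only relies on `classify`, swapping `ExistingJudgeStub()` for `NanoJudgeStub()` requires no change to the calling code.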

Curious about incorporating this into your workflow or adapting it to fit your enterprise needs? Reach out to us—we’re eager to collaborate and help you take your safety solutions to the next level!

Did You Know?

We were also recently featured in an MIT Technology Review article titled “How OpenAI Stress-Tests Its Large Language Models.” In the piece, we emphasize the urgent need for “downstream users to have access to tools that allow them to test large language models themselves.”

Looking Ahead

We have a family of Collinear Guard models in different sizes designed to meet various business needs, from high-stakes safety validation to real-time moderation like the model discussed in this blog post.

Ready to build Safe and Reliable AI systems? Get started today:

✨ Sign up at platform.collinear.ai or,

🚀 Explore our playground to test our models.
